Written by: Varun Kumar

Selecting the right tech stack for your next microservice


Choosing the proper tech stack for microservices is crucial because it directly affects the scalability, maintainability, and performance of the application. A microservices architecture breaks an application down into smaller, independent services, each with its own responsibilities. However, over-engineering the tech stack at the beginning, by adopting overly complex tools or unnecessary abstractions, can increase development time, raise operational costs, and make the system harder to manage. This often defeats the purpose of building microservices: the architecture becomes cumbersome rather than agile, which hinders the ability to deliver value quickly and adapt to changing requirements.

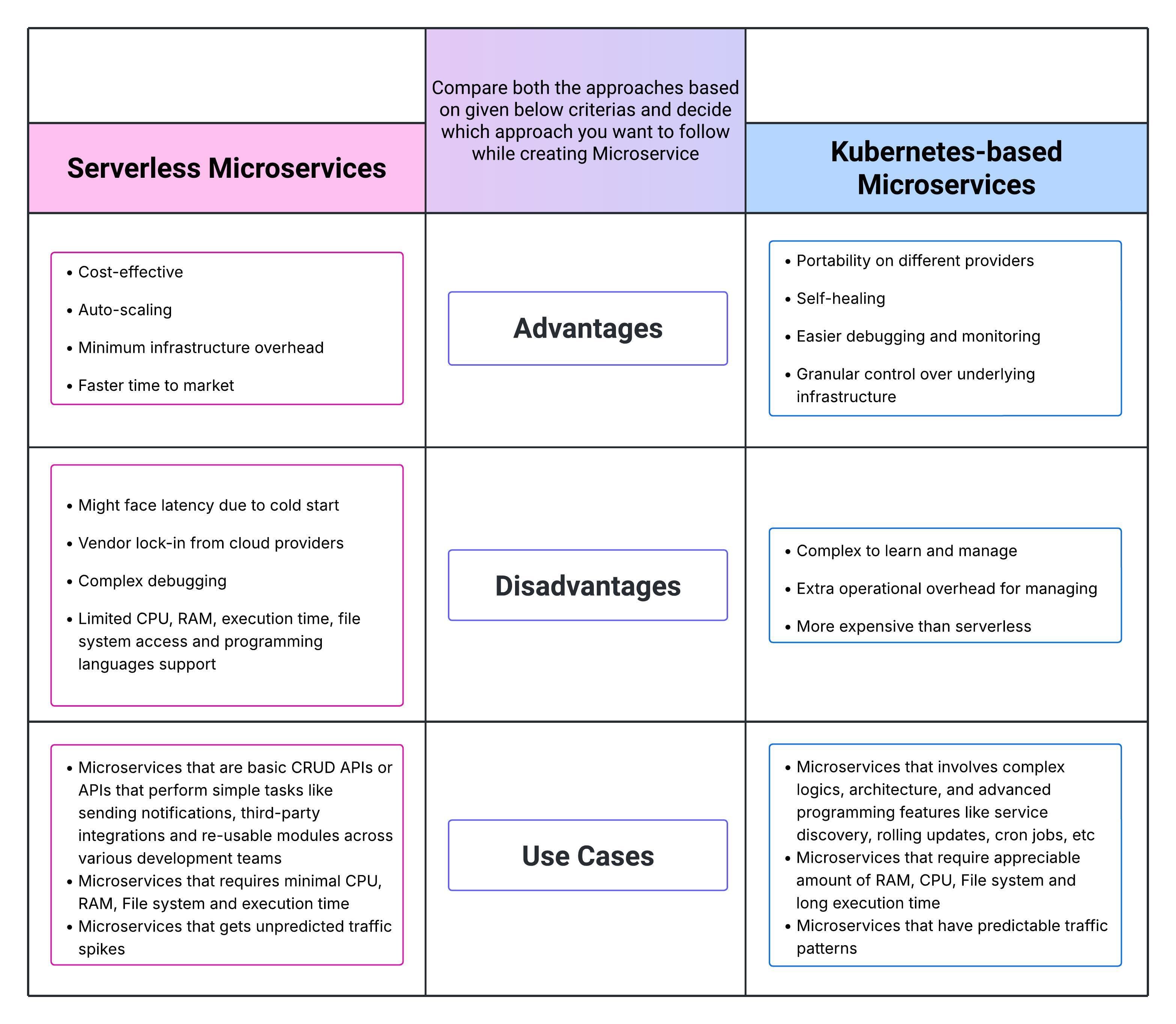

From time to time, developers and architects have to decide which tech stack to use for their microservices. I wrote this blog to help clear up exactly this confusion. We will discuss the two main platforms for building microservices: serverless microservices and Kubernetes-based microservices. We will also discuss why a hybrid approach that combines the two is often a good idea.

Creating Serverless Microservices

We will not cover serverless technologies in depth in this blog, but in short, serverless technologies let developers build and run applications without managing the underlying infrastructure: the cloud provider automatically handles server provisioning, scaling, and maintenance.
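As a rough illustration of what "not managing infrastructure" means in practice, here is a minimal sketch of a function in the shape the AWS Lambda Python runtime expects: a handler that receives an event and a context object. It assumes an API Gateway proxy integration (hence the statusCode/headers/body response); the greeting logic and the `name` query parameter are hypothetical.

```python
import json


def handler(event, context):
    """Entry point the platform invokes once per request.

    The provider provisions, scales, and tears down whatever runs this code;
    the function itself only maps an incoming event to a response.
    """
    # API Gateway proxy events carry query parameters here (the value may be None).
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")  # hypothetical query parameter

    # A proxy integration expects statusCode, headers, and a string body.
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


if __name__ == "__main__":
    # Local smoke test with a hand-built event; the context is unused here.
    print(handler({"queryStringParameters": {"name": "Lambda"}}, None))
```

Everything outside `handler` (servers, scaling, routing) is the provider's job, which is exactly the trade-off the rest of this section weighs.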

Available serverless services from various cloud providers

| Provider | Service Name | Further Reading |
|---|---|---|
| AWS | AWS Lambda | AWS Lambda Documentation |
| Microsoft Azure | Azure Functions | Azure Functions Documentation |
| Google Cloud | Google Cloud Functions | Google Cloud Functions Documentation |
| IBM Cloud | IBM Cloud Functions | IBM Cloud Functions Documentation |
| Cloudflare | Cloudflare Workers | Cloudflare Workers Documentation |
| Netlify | Netlify Functions | Netlify Functions Documentation |
| Vercel | Vercel Functions | Vercel Functions Documentation |
| Firebase | Firebase Cloud Functions | Firebase Cloud Functions Documentation |

There might be more providers offering serverless services, but the above list covers the most popular ones. Each of these providers has its own set of features, pricing models, and limitations, so it's essential to evaluate them based on your specific use case and requirements.

Advantages of using Serverless Platforms

- Cost-Effectiveness: You only pay for the compute time you consume, which can lead to significant cost savings, especially for applications with variable workloads.

- Automatic Scaling: Serverless platforms automatically scale your application based on demand, eliminating the need for manual intervention.

- Reduced Operational Overhead: The cloud provider manages the underlying infrastructure, allowing developers to focus on writing code rather than managing servers.

- Faster Time to Market: Serverless architectures enable rapid development and deployment, allowing teams to iterate quickly and deliver features faster.
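The pay-per-use point can be made concrete with a small estimate. AWS Lambda, for example, bills per request plus compute measured in GB-seconds (allocated memory multiplied by run time). The sketch below takes the rates as inputs because prices differ by provider, region, and architecture; the rates in the usage line are placeholders, not real price quotes, and free tiers are ignored.

```python
def estimate_monthly_cost(
    invocations: int,
    avg_duration_ms: float,
    memory_mb: int,
    price_per_gb_second: float,
    price_per_million_requests: float,
) -> float:
    """Estimate pay-per-use cost for one function over a month.

    Compute is billed in GB-seconds: allocated memory (GB) x run time (s).
    Free-tier allowances are ignored in this sketch.
    """
    gb_seconds = invocations * (avg_duration_ms / 1000) * (memory_mb / 1024)
    compute_cost = gb_seconds * price_per_gb_second
    request_cost = (invocations / 1_000_000) * price_per_million_requests
    return compute_cost + request_cost


# Hypothetical rates for illustration only; look up your provider's pricing.
monthly = estimate_monthly_cost(
    2_000_000, 100, 512,
    price_per_gb_second=0.00002,
    price_per_million_requests=0.20,
)
```

Here 2,000,000 invocations of 100 ms at 512 MB consume 100,000 GB-seconds. The key property is that zero invocations cost zero, which an always-on server cannot offer.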

Disadvantages of using Serverless Platforms (and their solutions)

- Cold Start Latency: Serverless functions may experience latency during cold starts, which can impact performance for certain applications. (Possible solution: Use provisioned concurrency (AWS Lambda) or warm-up strategies to keep functions warm and reduce cold start times.)

- Vendor Lock-In: Relying heavily on a specific serverless provider can lead to vendor lock-in, making it challenging to migrate to another platform.

- Limited Execution Time: Most serverless platforms impose limits on the execution time of functions, which may not be suitable for long-running tasks. (Possible solution: Break down long-running tasks into smaller, manageable functions or use alternative services for long-running processes.)

- Complexity in Debugging: Debugging serverless applications can be more complex due to the distributed nature of the architecture and the lack of direct access to the underlying infrastructure. (Possible solution: Use logging and monitoring tools to gain insights into function execution and performance, and consider using local development environments for testing.)

- State Management: Serverless functions are stateless by design, which can complicate state management and data persistence in certain applications. (Possible solution: Use external storage solutions like databases or caching services to manage state and data persistence.)

- Limited Language Support: Some serverless platforms may have limited support for certain programming languages or frameworks, which can restrict development choices. (Possible solution: Choose a serverless platform that supports the languages and frameworks you prefer, or consider using custom runtimes or containers to extend language support.)

- Limited File System Access: Serverless functions typically have limited access to the file system, which can be a limitation for applications that require extensive file operations. (Possible solution: Use cloud storage services such as Amazon S3, Google Cloud Storage, or Azure Blob Storage for file storage and retrieval.)
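The suggestion to break down long-running tasks can be sketched as a time-budgeted loop. The Lambda Python context object exposes `get_remaining_time_in_millis()`, and AWS Lambda caps a single invocation at 15 minutes, so a function can stop before the deadline and hand back a cursor for a follow-up invocation (for example via a queue message). The safety margin, the cursor format, and the `FakeContext` helper used for local testing are assumptions made for this sketch.

```python
from typing import Any, Callable

# Assumption: stop this many ms before the deadline so the cursor can be saved.
SAFETY_MARGIN_MS = 10_000


def process_in_chunks(items: list, start: int, context: Any,
                      work: Callable[[Any], None]) -> dict:
    """Process items[start:] until the remaining time budget runs low.

    Returns {"done": bool, "next_index": int}; when done is False, the caller
    re-queues or re-invokes the function with next_index as the new start.
    """
    index = start
    while index < len(items):
        # The Lambda context reports how much time this invocation has left.
        if context.get_remaining_time_in_millis() < SAFETY_MARGIN_MS:
            return {"done": False, "next_index": index}
        work(items[index])
        index += 1
    return {"done": True, "next_index": index}


class FakeContext:
    """Hypothetical local stand-in: each time check consumes step_ms."""

    def __init__(self, budget_ms: int, step_ms: int):
        self.remaining_ms = budget_ms
        self.step_ms = step_ms

    def get_remaining_time_in_millis(self) -> int:
        remaining = self.remaining_ms
        self.remaining_ms -= self.step_ms
        return remaining


handled = []
first = process_in_chunks(list(range(10)), 0, FakeContext(25_000, 5_000), handled.append)
# first == {"done": False, "next_index": 4}; a later invocation resumes at index 4
```

Because each chunk is idempotent with respect to its cursor, the same pattern also keeps the function stateless: the progress lives in the message or external store, not in memory.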

Business Use Cases for Serverless Microservices

- Ideal for workloads that need minimal RAM, CPU, file system access, and execution time, which makes serverless a great choice for simple CRUD operations, webhooks, and lightweight APIs.

- Perfect for scenarios with unpredictable traffic spikes, where automatic scaling is essential to manage demand efficiently without incurring excessive costs.

Choosing Tech Stack for Serverless Microservices

| Concern | Suitable Technologies and Frameworks | Notes |
|---|---|---|
| API Gateway | AWS: API Gateway, Azure: Azure API Management (APIM), Google Cloud: Google Cloud Endpoints | These services expose multiple microservices under a common host name, which simplifies management and lets you apply common authentication and security checks in the gateway before traffic reaches the function. |
| Programming language | Node.js, Python, Java, Go, PHP, .NET Core | Choose a technology and programming language that aligns with your team's expertise and the specific requirements of your microservices. |
| Choice of framework | Node.js: Express, Python: Flask, Java: Spring Boot, Go: Gin, PHP: Lumen, CodeIgniter, .NET Core: ASP.NET Core | Because functions have size limits, prefer a framework with a small footprint that still meets your project's needs. |
| Infrastructure as Code (IaC) Frameworks | SST (Serverless Stack), AWS CDK (Cloud Development Kit), Serverless Framework | These frameworks help you build and deploy serverless applications while also provisioning the cloud infrastructure and resources your microservices need. |
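To show what common authentication checks in the gateway buy you, the sketch below models a gateway check in-process: a decorator that rejects requests without a valid bearer token before the function body runs. In a real deployment, API Gateway, APIM, or Cloud Endpoints would perform this check through their own configuration (for example API keys or JWT validation); the header name, token value, and request shape here are hypothetical.

```python
import hmac
from functools import wraps
from typing import Callable

Handler = Callable[[dict], dict]

# Hypothetical secret for illustration only; never hard-code real credentials.
EXPECTED_TOKEN = "demo-token"


def require_bearer_token(fn: Handler) -> Handler:
    """Gateway-style check: only requests with a valid token reach fn."""
    @wraps(fn)
    def wrapper(request: dict) -> dict:
        # Assumes header names have already been lower-cased.
        headers = request.get("headers") or {}
        scheme, _, token = headers.get("authorization", "").partition(" ")
        # compare_digest avoids leaking how many characters matched via timing.
        valid = scheme.lower() == "bearer" and hmac.compare_digest(
            token.encode(), EXPECTED_TOKEN.encode()
        )
        if not valid:
            return {"statusCode": 401, "body": "unauthorized"}
        return fn(request)
    return wrapper


@require_bearer_token
def list_orders(request: dict) -> dict:
    """Business logic only; authentication already happened upstream."""
    return {"statusCode": 200, "body": "[]"}
```

Moving this wrapper's job into the gateway means every function behind it can stay as small as `list_orders`.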

Creating Kubernetes-based Microservices

Kubernetes is an open-source container orchestration platform that provides a robust framework for managing microservices architectures, allowing developers to deploy and manage applications in a consistent and efficient manner. It also provides features like scaling, load balancing, and self-healing for your microservices.
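Kubernetes' self-healing typically relies on liveness and readiness probes: the kubelet calls an endpoint the container exposes, restarts containers whose liveness probe fails, and stops routing Service traffic to pods whose readiness probe fails. For HTTP probes, any status from 200 to 399 counts as success. Below is a minimal standard-library sketch of such endpoints; the `/healthz` and `/readyz` paths, port 8080, and the `READY` flag are conventions assumed here, since the actual paths are whatever you configure in the pod spec.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical flag: flipped to True once dependencies (DB pool, caches) are ready.
READY = {"value": False}


def probe_response(path: str, ready: bool) -> tuple[int, bytes]:
    """Map a probe path to (HTTP status, body).

    /healthz -> liveness: the process is up and able to answer.
    /readyz  -> readiness: the pod may receive traffic.
    """
    if path == "/healthz":
        return 200, b"ok"
    if path == "/readyz":
        return (200, b"ready") if ready else (503, b"not ready")
    return 404, b"not found"


class ProbeHandler(BaseHTTPRequestHandler):
    def do_GET(self) -> None:
        status, body = probe_response(self.path, READY["value"])
        self.send_response(status)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve(port: int = 8080) -> None:
    """Run the probe server; blocks forever, so call it from your entry point."""
    READY["value"] = True  # pretend startup work has finished
    HTTPServer(("0.0.0.0", port), ProbeHandler).serve_forever()
```

Keeping liveness trivial and putting dependency checks in readiness avoids restart loops when a downstream service is briefly unavailable.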

Available Kubernetes services from various cloud providers

| Provider | Service Name | Further Reading |
|---|---|---|
| AWS | Amazon EKS | Amazon EKS Documentation |
| Microsoft Azure | Azure Kubernetes Service (AKS) | Azure AKS Documentation |
| Google Cloud | Google Kubernetes Engine (GKE) | Google GKE Documentation |
| IBM Cloud | IBM Cloud Kubernetes Service | IBM Cloud Kubernetes Documentation |
| DigitalOcean | DigitalOcean Kubernetes | DigitalOcean Kubernetes Documentation |
| Red Hat | OpenShift | OpenShift Documentation |
| VMware | Tanzu Kubernetes Grid | Tanzu Kubernetes Grid Documentation |
| Oracle Cloud | Oracle Container Engine for Kubernetes (OKE) | Oracle OKE Documentation |
| Alibaba Cloud | Alibaba Cloud Container Service for Kubernetes (ACK) | Alibaba ACK Documentation |
| Linode | Linode Kubernetes Engine (LKE) | Linode LKE Documentation |
| Vultr | Vultr Kubernetes Engine | Vultr Kubernetes Documentation |

Advantages of using Kubernetes

- Portability: Kubernetes can run on various environments, including on-premises, public cloud, and hybrid cloud setups, making it easier to move microservices across different providers.

- Scalability: Kubernetes can automatically scale microservices based on demand, ensuring optimal resource utilization and performance.

- Self-Healing: Kubernetes can automatically restart, reschedule, or replace containers that fail, ensuring high availability and reliability of microservices.

- More control over the underlying infrastructure: Kubernetes provides more control over the underlying infrastructure compared to serverless platforms, allowing for custom configurations and optimizations.

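- Easier debugging and monitoring: Long-running containers can be inspected directly (for example with kubectl logs and kubectl exec) and plug into a mature monitoring ecosystem, which often makes issues easier to identify and resolve than on serverless platforms.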
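Kubernetes' automatic scaling is usually handled by the Horizontal Pod Autoscaler (HPA), whose documented core rule is desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue), with no change when the ratio is within a tolerance (0.1 by default). The sketch below reproduces only that rule plus min/max clamping; the real controller also accounts for pod readiness, missing metrics, and stabilization windows, and the default bounds here are illustrative.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10, tolerance: float = 0.1) -> int:
    """Core HPA rule: scale in proportion to how far the metric is off target."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: leave it alone
    desired = math.ceil(current_replicas * ratio)
    # Illustrative bounds standing in for minReplicas/maxReplicas in the HPA spec.
    return max(min_replicas, min(max_replicas, desired))
```

For example, 3 replicas averaging 90% CPU against a 60% target scale to 5, while 3 replicas at 63% stay put because the ratio (1.05) is within tolerance.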

Disadvantages of using Kubernetes

- Complexity: Kubernetes can be complex to set up and manage, and it has a steep learning curve for developers and operations teams.

- Operational Overhead: Managing Kubernetes clusters requires additional operational overhead, including monitoring, scaling, and maintaining the underlying infrastructure.

- Cost: Running Kubernetes clusters can be more expensive than serverless platforms, especially for small applications with low traffic.

- Resource Management: Kubernetes requires careful resource management to ensure optimal performance, which can be challenging for teams without experience in container orchestration.
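One reason resource management needs care: the scheduler places pods according to their resource requests, so whichever resource runs out first caps how many pods fit on a node, and the remainder of the other resource sits idle. This simplified sketch ignores system daemons, other workloads, and limits; the numbers in the example are hypothetical.

```python
def pods_per_node(node_cpu_m: int, node_mem_mib: int,
                  pod_cpu_m: int, pod_mem_mib: int) -> tuple[int, int, int]:
    """Return (pods that fit, unused CPU millicores, unused memory MiB).

    Scheduling is based on requests, so the scarcer resource caps the count.
    """
    fit = min(node_cpu_m // pod_cpu_m, node_mem_mib // pod_mem_mib)
    return fit, node_cpu_m - fit * pod_cpu_m, node_mem_mib - fit * pod_mem_mib


# Hypothetical node with 3,800m CPU and 14,000 MiB allocatable.
print(pods_per_node(3_800, 14_000, 500, 1_024))  # (7, 300, 6832)
```

Here CPU caps the node at 7 pods while 6,832 MiB of memory stays unused, a sign that the pod requests are out of balance with the node shape.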

Business Use Cases for Kubernetes-based Microservices

- Suitable for applications with complex logic and architecture that require advanced features such as service discovery, load balancing, and rolling updates.

- Ideal for applications with predictable traffic patterns and resource requirements, where the overhead of managing Kubernetes is justified by the need for control and customization.

- Best for applications with long-running processes or tasks that require more control over the underlying infrastructure and resource management.

Choosing Tech Stack for Kubernetes-based Microservices

In most cases, the tech stack you choose will depend on your specific use case, team expertise, and project requirements. However, here are some common technologies and frameworks that are often used alongside Kubernetes:

| Concern | Suitable Technologies and Frameworks | Notes |
|---|---|---|
| API Gateway | AWS: API Gateway, Azure: Azure API Management (APIM), Google Cloud: Google Cloud Endpoints | These services expose multiple microservices under a common host name, which simplifies management and lets you apply common authentication and security checks in the gateway before traffic reaches the service. |
| Programming language | Almost any programming language | Kubernetes is a highly flexible technology and can work with almost any programming language. |
| Choice of framework | Any framework of your choice | Frameworks that have complex dependencies might pose challenges when running on Kubernetes. |
| Containerization | Docker | Docker is the most widely used containerization platform, allowing you to package your microservices and their dependencies into containers for easy deployment and management in Kubernetes. |
| Service mesh | Istio, Linkerd, Consul | Service meshes provide advanced traffic management, security, and observability features for microservices running in Kubernetes. |
| Monitoring and logging | Prometheus, Grafana, ELK Stack (Elasticsearch, Logstash, Kibana) | Monitoring and logging tools help you track the performance and health of your microservices running in Kubernetes. |
| Message broker | RabbitMQ, Apache Kafka, NATS | Message brokers facilitate communication between microservices, enabling asynchronous messaging and event-driven architectures. |
| Caching | Redis, Memcached | Caching solutions help improve the performance of your microservices by storing frequently accessed data in memory. |
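The caching row usually means the cache-aside pattern: read from the cache first, and on a miss load from the source of truth and store the result with an expiry. In the sketch below a plain dict stands in for Redis or Memcached (with Redis the same flow maps to GET, then SET with an EX expiry on a miss); the class name, the TTL, and the injectable clock are illustrative assumptions.

```python
import time
from typing import Any, Callable


class CacheAside:
    """Cache-aside (lazy loading) in front of a slow loader.

    A dict stands in for Redis/Memcached; entries are (expires_at, value).
    """

    def __init__(self, loader: Callable[[str], Any], ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic):
        self._loader = loader
        self._ttl = ttl_seconds
        self._clock = clock
        self._store: dict[str, tuple[float, Any]] = {}

    def get(self, key: str) -> Any:
        now = self._clock()
        entry = self._store.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]  # hit: skip the slow backing store
        value = self._loader(key)  # miss or expired: go to the source of truth
        self._store[key] = (now + self._ttl, value)
        return value
```

The TTL bounds how stale a cached value can get; writes that must be visible immediately should also delete or overwrite the cached key.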

Hybrid Approach (Serverless + Kubernetes)

Not all parts of a software application are equally complex, important, or large, so building every microservice the same way (all serverless or all Kubernetes-based) is rarely a good idea. A hybrid approach lets you evaluate each microservice individually: what it does and how it does it. By learning to leverage the strengths of both serverless and Kubernetes-based microservices, you can build a more efficient and cost-effective architecture overall. Here are some examples of when to use each approach:

| Concern | Serverless | Kubernetes-based |
|---|---|---|
| Simple CRUD operations | ✅ | ❌ |
| Long-running processes | ❌ | ✅ |
| Event-driven architectures | ✅ | ❌ |
| Complex microservices with multiple dependencies | ❌ | ✅ |
| High traffic spikes | ✅ | ❌ |
| Predictable traffic patterns | ❌ | ✅ |
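The comparison table can be read as a per-service rule of thumb. The sketch below encodes it; the trait names and the precedence (a Kubernetes-leaning trait wins because long-running work and heavy dependencies are hard to work around on a function platform) are a judgment call made for this example, not a definitive policy.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    """Traits taken from the comparison table (hypothetical model)."""
    long_running: bool = False
    many_dependencies: bool = False
    predictable_traffic: bool = False
    event_driven: bool = False
    spiky_traffic: bool = False


def suggest_platform(w: Workload) -> str:
    # Hard constraints first: these are difficult to satisfy on functions.
    if w.long_running or w.many_dependencies:
        return "kubernetes"
    # Traits where serverless scaling and pay-per-use shine.
    if w.event_driven or w.spiky_traffic:
        return "serverless"
    if w.predictable_traffic:
        return "kubernetes"
    # Simple CRUD with no strong signal: start with the lower-overhead option.
    return "serverless"
```

Running each service through a checklist like this keeps the decision explicit, so a webhook receiver and a long-running report generator in the same product can land on different platforms.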

Conclusion

The purpose of this blog is to list the most popular approaches, their advantages and disadvantages, how to figure out which business use case your requirements fall into, and how to choose the right tech stack for your microservices. By understanding the strengths and weaknesses of each approach, I hope you can make informed decisions that align with your business goals and technical needs.